Amazon has defended its facial-recognition tool, Rekognition, against claims of racial and gender bias, following a study published by the Massachusetts Institute of Technology.

The researchers compared tools from five companies, including Microsoft and IBM.

While none of the tools was 100% accurate, the study found that Amazon’s Rekognition tool performed the worst when it came to recognising women with darker skin.

Amazon said the study was “misleading”.

The study found that Amazon’s tool had an error rate of 31% when identifying the gender of images of women with dark skin.

This compared with a 22.5% rate from Kairos, which offers a rival commercial product, and a 17% rate from IBM.

By contrast, Amazon, Microsoft and Kairos all successfully identified images of light-skinned men 100% of the time.

The tools work by attaching a probability score to each result, indicating how confident they are that the result is correct.

Facial-recognition tools are trained on huge datasets of hundreds of thousands of images.

But there is concern that many of these datasets are not sufficiently diverse to enable the algorithms to learn to correctly identify non-white faces.

Clients of Rekognition include a company that provides tools for US law enforcement, a genealogy service and a Japanese newspaper, according to the Amazon Web Services website.

Use with caution

In a blog post, Dr Matt Wood, general manager of artificial intelligence at AWS, highlighted several concerns about the study, including that it did not use the latest version of Rekognition.

He said the findings from MIT did not reflect Amazon’s own research, which had used 12,000 images of men and women of six different ethnicities.

“Across all ethnicities, we found no significant difference in accuracy with respect to gender classification,” he wrote.

He also said the company advised law enforcement to use machine-learning facial-recognition results only when the certainty of the result was listed at 99% or higher, and never to use them as the sole source of identification.

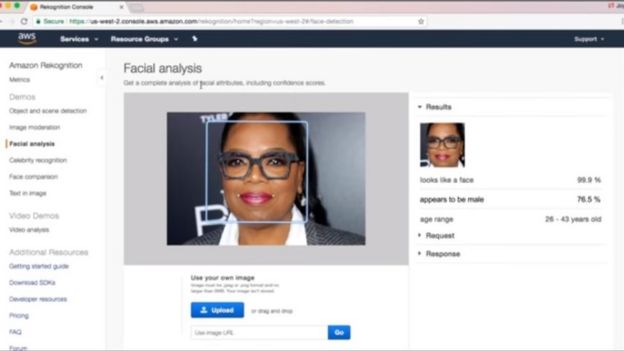

An earlier version of Amazon’s facial-recognition tool analysing an image of US TV presenter Oprah Winfrey and deciding she is 76.5% likely to be a man

“Keep in mind that our benchmark is not very challenging. We have profile images of people looking straight into a camera. Real-world conditions are much harder,” said MIT researcher Joy Buolamwini in a Medium post responding to Dr Wood’s criticisms.

In an earlier YouTube video, published in June 2018, MIT researchers showed various facial-recognition tools, including Amazon’s, suggesting that the US TV presenter Oprah Winfrey was probably a man, based on a professional photograph of her.

“The main message is to check all systems that analyse human faces for any kind of bias. If you sell one system that has been shown to have bias on human faces, it is doubtful your other face-based products are also completely bias free,” Ms Buolamwini said.

Source: www.bbc.com